Hello, I’m Ylli Bajraktari, CEO of the Special Competitive Studies Project. In this edition of our newsletter, we introduce the Framework for Identifying Highly Consequential AI Use Cases, released today by SCSP’s Society Panel in collaboration with the Johns Hopkins University Applied Physics Laboratory (JHUAPL).

Register to join SCSP, in partnership with Foreign Policy magazine, for a new virtual series, Promise Over Peril, starting on November 8, 2023, at 10:00 AM EST.

We are now sharing new job opportunities to join the SCSP team. Learn more about two open positions, Director of Foreign Policy and Senior Director of Defense, on the career page on our website. Apply, share with your network, and continue to be a part of our important work. Thanks for following and believing in the mission.

A Framework for Identifying Highly Consequential AI Use Cases

AI will affect each one of us in some way. From AI-enabled chatbots to AI-assisted health care and personalized education to AI tools in our workplaces and schools, AI is here to stay. This is why the Biden Administration’s Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence is not just a matter of policy but of personal significance to every American impacted by AI.

The United States’ approach to AI governance encompasses two overarching ideals: (1) we must harness the benefits of AI while mitigating the worst of the harms; and (2) we need to balance innovation with regulation. These ideals run through the White House Blueprint for an AI Bill of Rights, the NIST AI Risk Management Framework (AI RMF), and, most recently, the President’s Executive Order. What do these ideals mean in a practical sense, and how do we implement them?

The best way is to take the most intuitive approach. We need to identify the AI outcomes that we definitely want and those we do not. Certainly, we cannot put effort into shaping every AI outcome. We need to determine which AI outcomes are the most important to us, the ones that align with our values and norms. And we need to provide adequate certainty for the public that AI will be developed and used according to these values and norms. That is the objective of the “Framework for Identifying Highly Consequential AI Use Cases” (HCAI Framework).

We believe the HCAI Framework developed by SCSP, in collaboration with the Johns Hopkins University Applied Physics Laboratory, provides a path forward. Our framework aims to be as dynamic and agile as AI itself. We recognize the futility of a static list of technologies or applications to identify high-stakes AI uses or classes of use, as the technology and its uses are in constant flux. Instead, our approach is to develop criteria that can adapt to the technology's rapid evolution and the changing contexts in which it is applied.

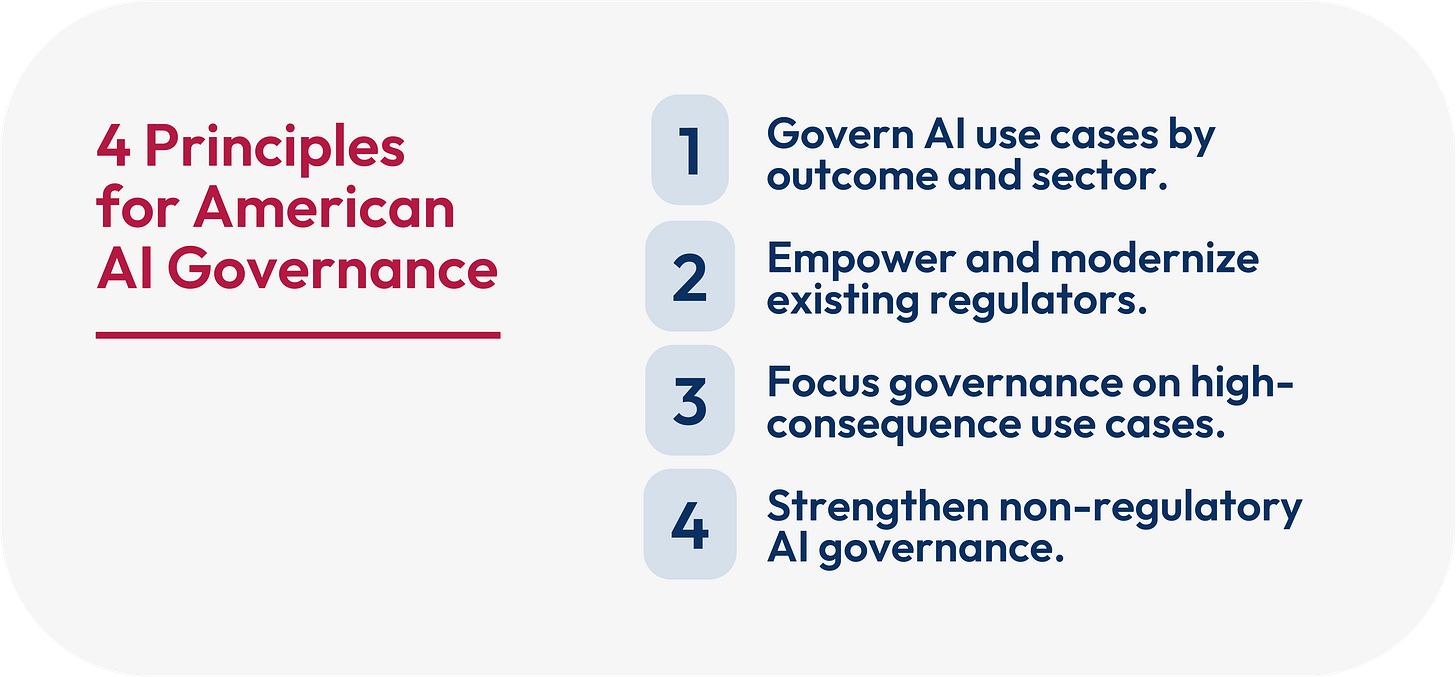

The framework is built upon the four AI governance principles outlined in our report, Mid-Decade Challenges to National Competitiveness. They are:

Sector-specific AI governance: The risks and opportunities presented by AI are inextricably tied to the context in which it is used. Therefore, sector-specific governance is the best approach to balancing interests to achieve optimal outcomes.

Empower and modernize existing regulators: The United States needs to empower and modernize its key regulators, and energize their engagement for the new AI era.

Focus governance on high-consequence use cases: It is impractical to govern every AI use or outcome. Regulators should focus their efforts on AI uses and outcomes that will be highly consequential to society, including potential unintended uses, whether beneficial or harmful.

Strengthen non-regulatory AI governance: Strong, robust non-regulatory governance mechanisms should be used in addition to regulatory guardrails to properly shape AI development and use to ensure flexibility, adaptiveness, and relevance.

There are three AI lifecycle points at which the framework can be applied: (1) regulators foresee a new application for AI; (2) a new application for AI is under development or has been proposed to a regulatory body; and (3) an existing AI system has created a highly consequential impact that triggers an ex post facto regulatory review.

Strategic Regulatory Focus

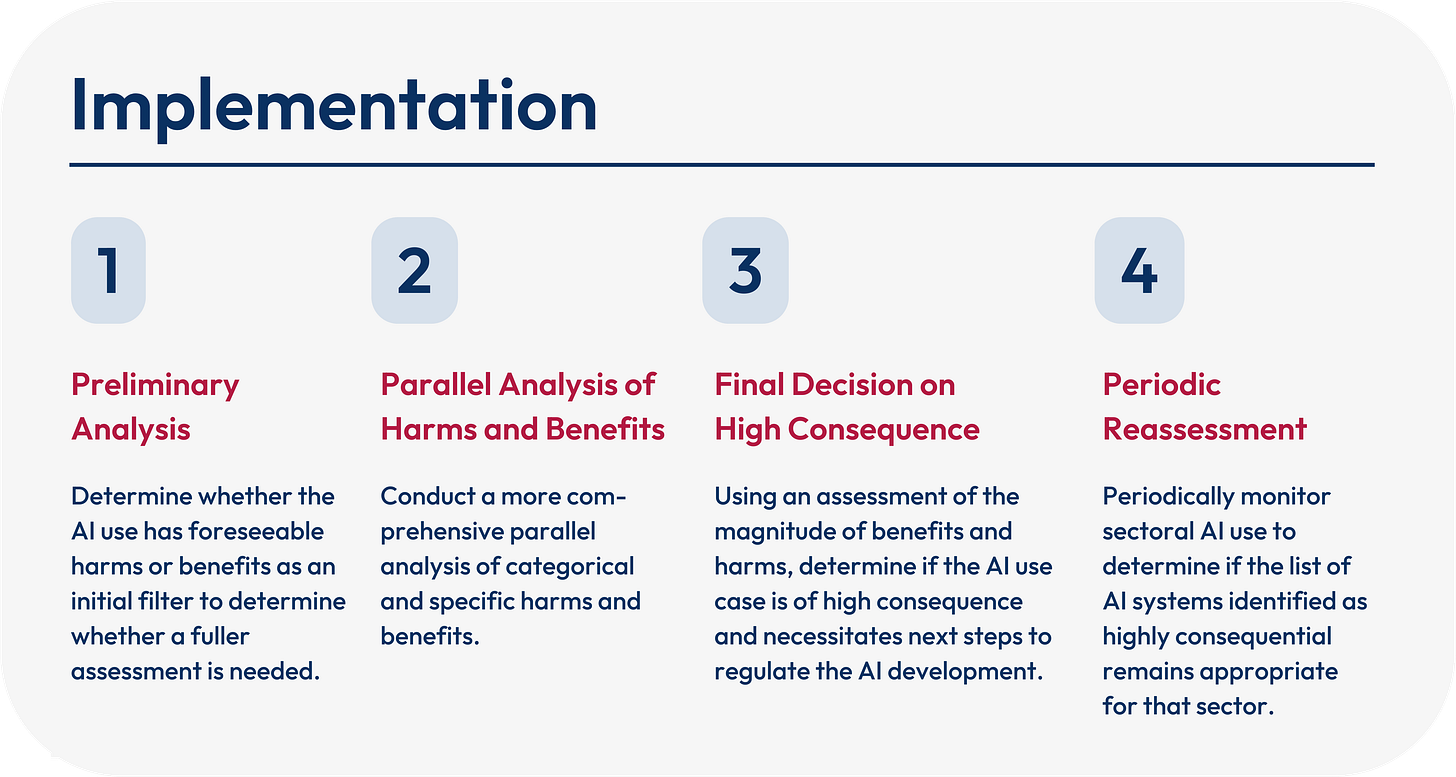

The framework introduces a methodical approach to determine whether an AI use case carries a high potential for significant societal impact and thus warrants further regulatory attention. The process is broken down as follows:

The framework sets out categories of potential harms and benefits, with illustrative examples of specific harms and benefits in each, and provides a systematic way to understand their magnitudes (e.g., probability and scope). This comprehensive assessment informs whether the AI use is highly consequential to society and therefore merits regulatory focus.

Dynamic and Transparent

By documenting every step and decision in the process, the framework promotes accountability and transparency, giving the public and industry certainty about the U.S. approach to identifying highly consequential AI uses. Moreover, the framework suggests fostering public engagement, creating a public registry of evaluated AI use cases, and conducting continuous monitoring.

The HCAI Framework is a living tool that adapts to sector-specific needs by embracing a risk-based and dynamic approach that aligns with American values.