Hello, I’m Ylli Bajraktari, CEO of the Special Competitive Studies Project. In this edition of 2-2-2, SCSP’s Society Panel provides an overview of their chapter of our recent report, Generative AI: The Future of Innovation Power. The link to the full Governance of Generative AI Memo can be found at the bottom of this newsletter. Reach out to info@scsp.ai with any questions!

Generative AI: The Future of Innovation Power Featured on SCSP’s NatSec Tech

Members of the SCSP team join Jeanne Meserve to summarize each memo from our fall report, “Generative AI: The Future of Innovation Power.”

In this episode, SCSP’s Jenilee Keefe Singer and Meaghan Waff summarize the Governance of Generative AI Memo.

GenAI is a Top Priority for Governance

This fall, we expect a series of important actions aimed at addressing the AI governance challenge. The forthcoming White House Executive Order is expected to provide guidance to federal agencies and industry and to protect Americans’ rights and safety. The second AI Insight Forum led by Senate Majority Leader Schumer will continue to educate Congress on the technology, this time focusing on AI innovation. The UK AI Safety Summit will bring together international governments, industry, and civil society experts for a two-day agenda on frontier AI risks and safety. The European Union is expected to finalize its AI Act, likely by the end of the year. These actions illustrate the momentous task of shaping AI technologies, domestically and globally.

The rapid evolution of generative artificial intelligence (GenAI) technologies, exemplified by groundbreaking proprietary models like ChatGPT and open-source models like Llama and Falcon, is reshaping the AI landscape. As the barriers to accessing these cutting-edge AI capabilities diminish, we stand on the brink of unprecedented opportunities as well as substantial harms.

AI will change the very fabric of our national security, economy, and society. Like electricity, this transformative technology will accelerate progress across all fields and domains. In many cases, AI is already helping us solve some of our most pressing problems. For example, AI is transforming healthcare, both by improving diagnosis and by aiding researchers in the search for novel pharmaceuticals. It is reshaping education, allowing students young and old to access customized tutoring with nothing more than a stable internet connection. Beyond these clear benefits, however, AI also has the potential to cause harm. As an immediate concern, powerful AI tools can spread misinformation and amplify long-standing human biases. On the horizon, the misuse of advanced AI systems could lead to severe consequences, ranging from the generation of novel pathogens to runaway AI. To shape AI for the public good, we must both incentivize beneficial uses of AI and mitigate the worst of its harms as the technology advances.

Governance in the realm of AI is a delicate balance. To establish robust and effective mechanisms, the regulatory process must be meticulous, incorporating insights from a multitude of stakeholders and reflecting a profound grasp of the technology's potential and pitfalls. This takes time, and such thoughtful deliberation stands in contrast to the breakneck speed of AI advancements.

AI is advancing at a time when the world order is contested. Leadership in this technology will shape national security, economic, and societal outcomes. To lead, the United States must continue to innovate.

The Governance of Generative AI Memo outlines a strategic roadmap, emphasizing urgent actions to be taken over the next 12 to 18 months and suggesting longer-term actions. With the 2024 election cycle on the horizon, a potential surge in GenAI-driven disinformation threatens the very core of our democratic principles. As billions of people around the world prepare to cast their votes, the challenges posed by sophisticated synthetic media, whether deepfake imagery or manipulated audio, should not be underestimated.

Further, the swift advancement and adoption of GenAI applications make it more urgent to address AI threats to democratic values such as privacy, non-discrimination, fairness, and accountability. The United States has long-established, robust governance mechanisms that can be leveraged to address GenAI threats to our society. At the same time, the United States must explore new approaches and authorities to meet needs that existing ones do not. In addition, because GenAI crosses borders and jurisdictions, the United States and other nations need to explore international governance mechanisms for addressing the global issues it raises.

Our imperative is clear. AI governance, both regulatory and non-regulatory, must keep pace with the state of the technology. The United States and like-minded democracies must lead the push to responsibly govern GenAI. SCSP’s governance memo articulates clear policy guidelines that can safeguard American democracy, address domestic regulatory needs, and strengthen international norms, while harnessing the transformative potential of GenAI.

Recommended Actions

1. Domestic Elections Systems

One of the immediate GenAI concerns requiring U.S. attention is that of U.S. domestic elections. In 2024, over a billion people will be going to the polls globally. How the U.S. government and the American public interact with GenAI has the potential to impact elections, an integral component of the democratic process. While disinformation and misleading content in the runup to elections are not new, GenAI capabilities could accelerate the speed, volume, and precision of misinformation content like deepfakes.

The governance memo recommends three simultaneous actions to protect U.S. digital information and election systems. First, the National Institute of Standards and Technology (NIST) should convene industry to agree on a voluntary standard of conduct for synthetic media around elections in advance of the 2024 U.S. elections. Second, to complement the standard of conduct, the U.S. government should scale public digital literacy education and disinformation awareness by: (1) assigning a lead agency to alert the public to synthetic media use in federal elections, and (2) encouraging department and agency heads to build public resilience against disinformation under the guidance of the lead agency. Finally, content distribution platforms should be required to technically support a content provenance standard that identifies whether content was generated or modified by GenAI. By appointing a trusted federal entity to monitor the platforms and enforce these mandates, the U.S. government can use content provenance standards to encourage transparency and increase safety.

2. Domestic Regulatory Needs

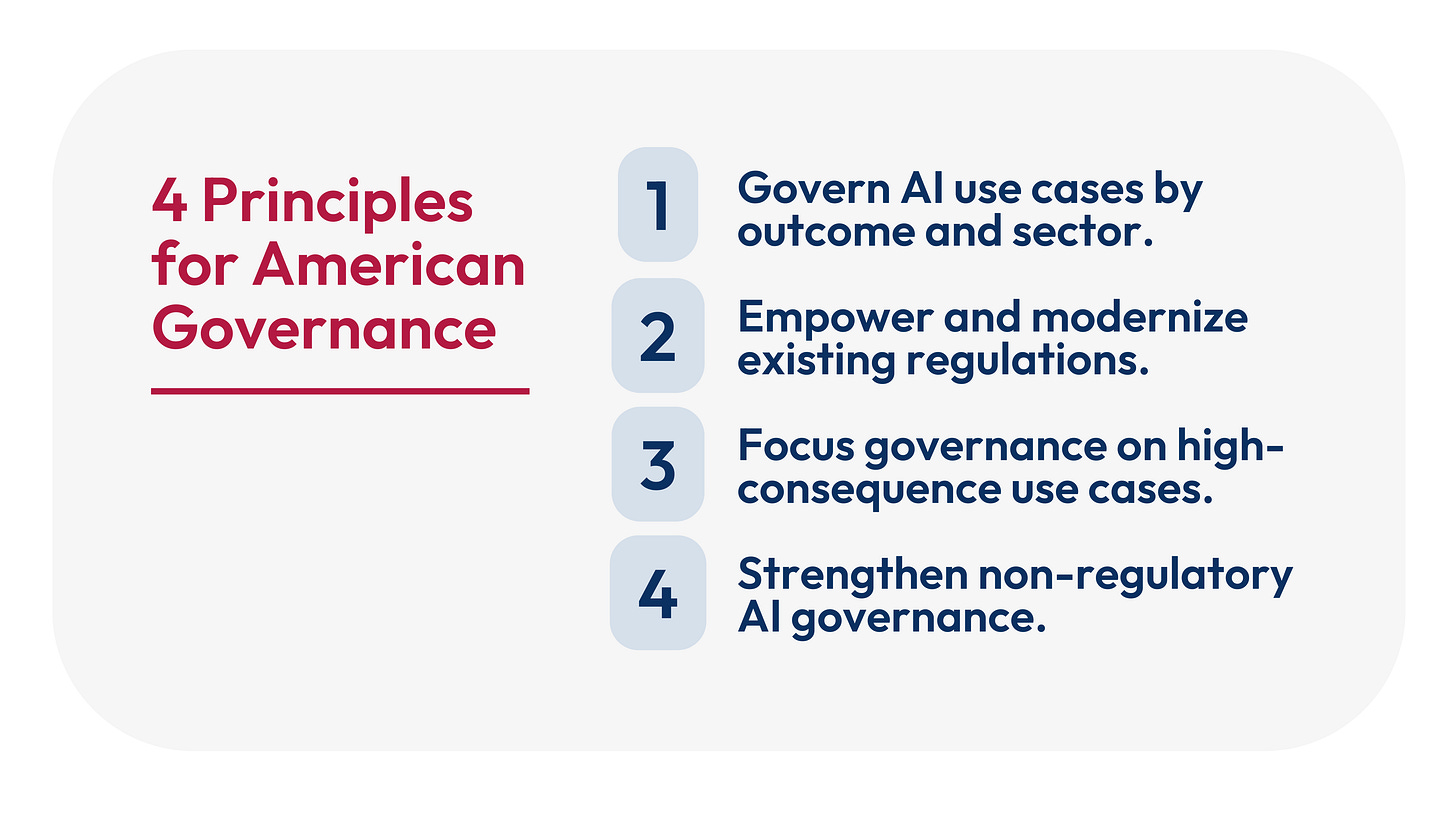

The United States should adopt a flexible AI governance model in accordance with four AI governance principles previously released by SCSP:

The United States has infrastructure in place to address many of the opportunities and challenges raised by AI. The U.S. government should leverage existing, robust regulatory mechanisms to govern GenAI use by sector. Importantly, regulatory bodies should be empowered with necessary skills and expertise to regulate AI.

Regulators will not be able to regulate every AI model or tool. Therefore, they should focus their efforts on AI use cases that are highly consequential to society: encouraging AI uses with significant benefits and mitigating the worst of the harms. To do this, regulators need tools to identify these use cases, whether positive or negative. The White House Office of Science and Technology Policy (OSTP), in coordination with the Office of Management and Budget (OMB), or another equivalent government entity, should provide sector regulators with such guidance.

A framework for identifying highly consequential AI use should allow regulators flexibility to apply their sector-specific experience and expertise, but also be standardizable across agencies to provide industry and the public a level of certainty as to what AI uses will be considered highly consequential.

In the longer term, a centralized AI authority is needed to regulate AI issues that cut across sectors and to fill regulatory gaps within sectors. Likewise, policy should advance core principles such as transparency, for example through requirements for model cards and data sheets.

3. Digital Platforms from Countries of Concern

As we enter the runup to the 2024 election cycle, many U.S. voters use foreign digital platforms from countries of concern, and some of these platforms (like TikTok) are simultaneously adopting GenAI capabilities. The convergence of these platforms with GenAI disinformation risks presents a novel threat: foreign governments or actors may be able to control or otherwise influence the content with which voters engage on specific platforms.

The United States should address threats posed by foreign digital platforms from countries of concern using a two-phase approach. First, in the immediate term, the federal government should consider narrow, product-specific restrictions on foreign digital platforms that represent national security risks. Second, in the longer term, the United States should develop a more comprehensive risk-based policy framework to restrict foreign digital platforms from countries of concern. The framework should consider a suite of legislative, regulatory, and available economic options to mitigate harm from such platforms, offering a range of tools that policy leaders could employ on a case-by-case basis. It should be developed in parallel as policymakers introduce focused bans on specific platforms.

4. Governing Transnational Generative AI Challenges

The transnational nature of GenAI makes international mechanisms a necessary corollary to domestic governance actions. Because GenAI technologies are intangible, they cut across borders, affecting both state sovereignty and many shared societal issues, from social harms to legitimate law enforcement needs. To support the development of a common international foundation for GenAI regulation, the United States should establish a new multilateral and multi-stakeholder “Forum on AI Risk and Resilience” (FAIRR) under the auspices of the G20.

FAIRR would convene three verticals focused on: preventing malign GenAI use by non-state actors (e.g., criminal activities or acts of terrorism); mitigating the most consequential and injurious GenAI impacts on society (e.g., illegitimate discriminatory impacts due to system bias); and managing GenAI use that infringes on other states’ sovereignty (e.g., foreign malign influence operations or the use of AI tools in cyber surveillance). To achieve this, FAIRR would bring together relevant stakeholders (national officials, regulators, relevant private sector companies, and academia/civil society) to work in a soft law fashion toward interoperable standards and rules that domestic regulators can independently implement.

We have reached a critical juncture where policy action must match the pace and promise of AI advancement. The consequences of inaction or overreaction are real and imminent. By balancing innovation and regulation and harnessing the opportunities of GenAI as described above, we can deliver on the promise of an efficient democratic framework for the management of GenAI.

For the United States to lead in GenAI technologies, it must put forth a democratic model for governing GenAI worthy of emulation across the world.

You can read more detailed versions of these recommendations in our full report.